Overview of our two-stage fine-tuning strategy. We run prompt-tuning at

The ELI5 Guide to Prompt Injection: Techniques, Prevention Methods

SELF-ICL: Zero-Shot In-Context Learning with Self-Generated Demonstrations (NTU 2 - bilibili

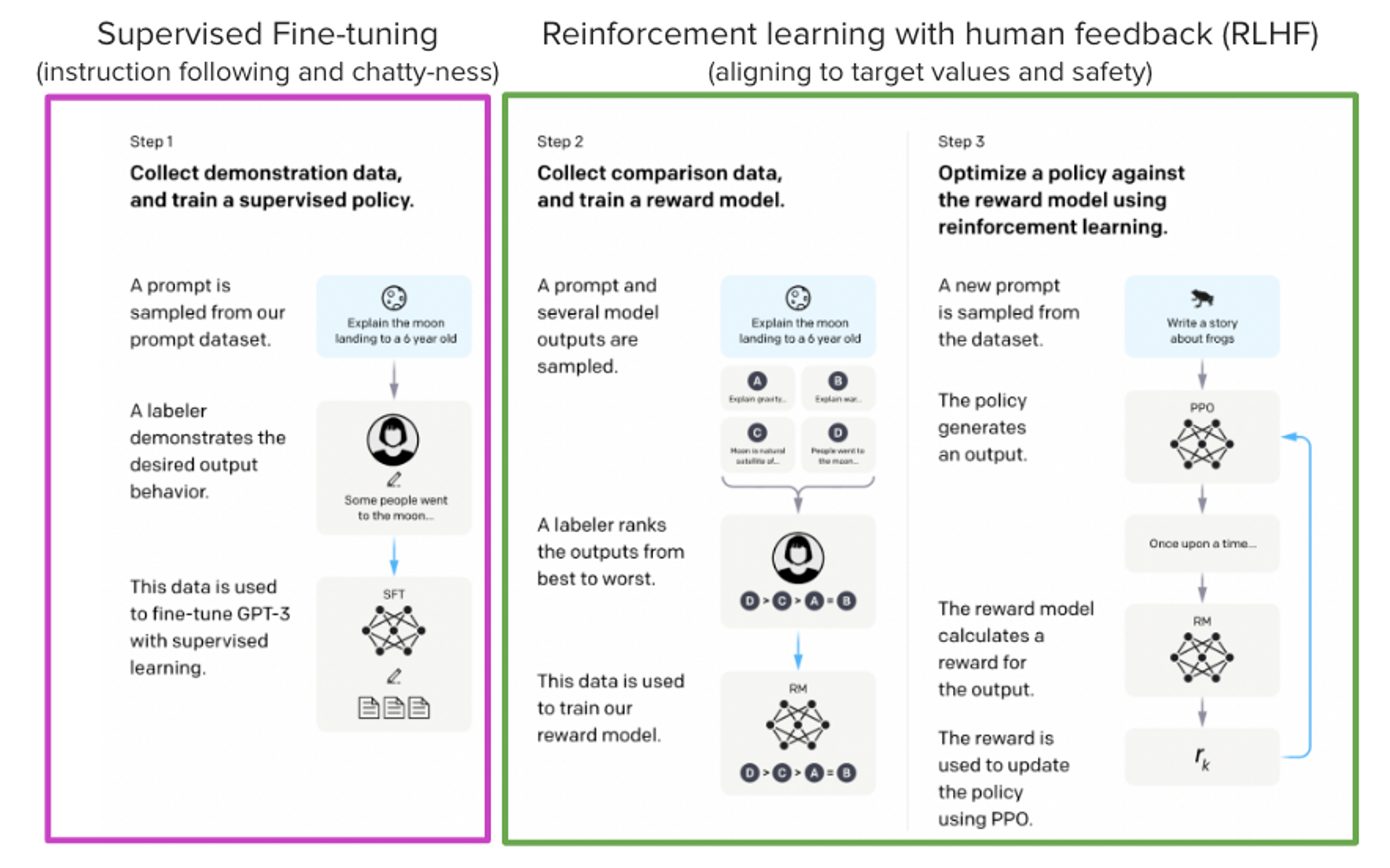

StackLLaMA: A hands-on guide to train LLaMA with RLHF

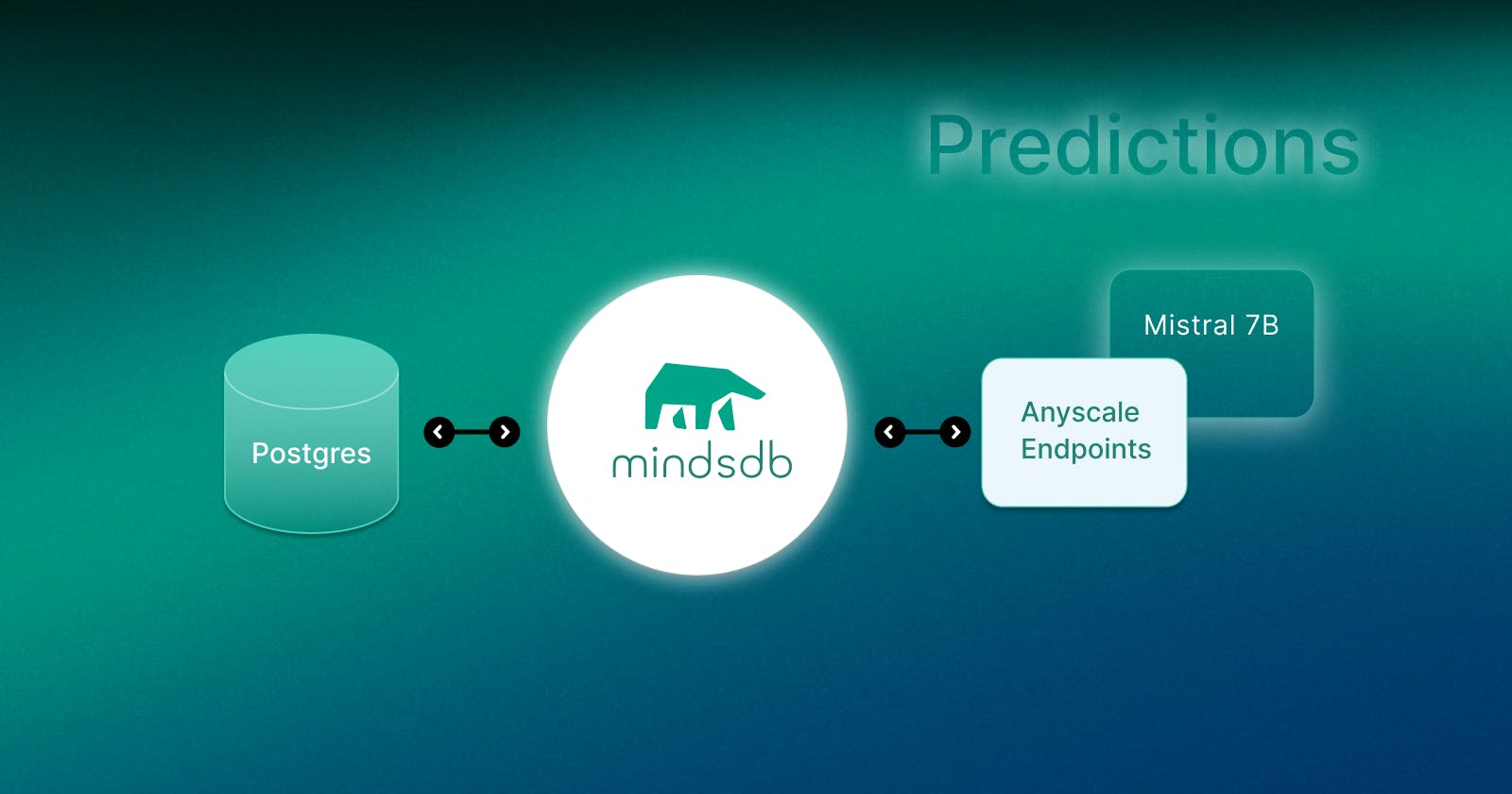

How to Fine-Tune an AI Model in MindsDB Using Anyscale Endpoints

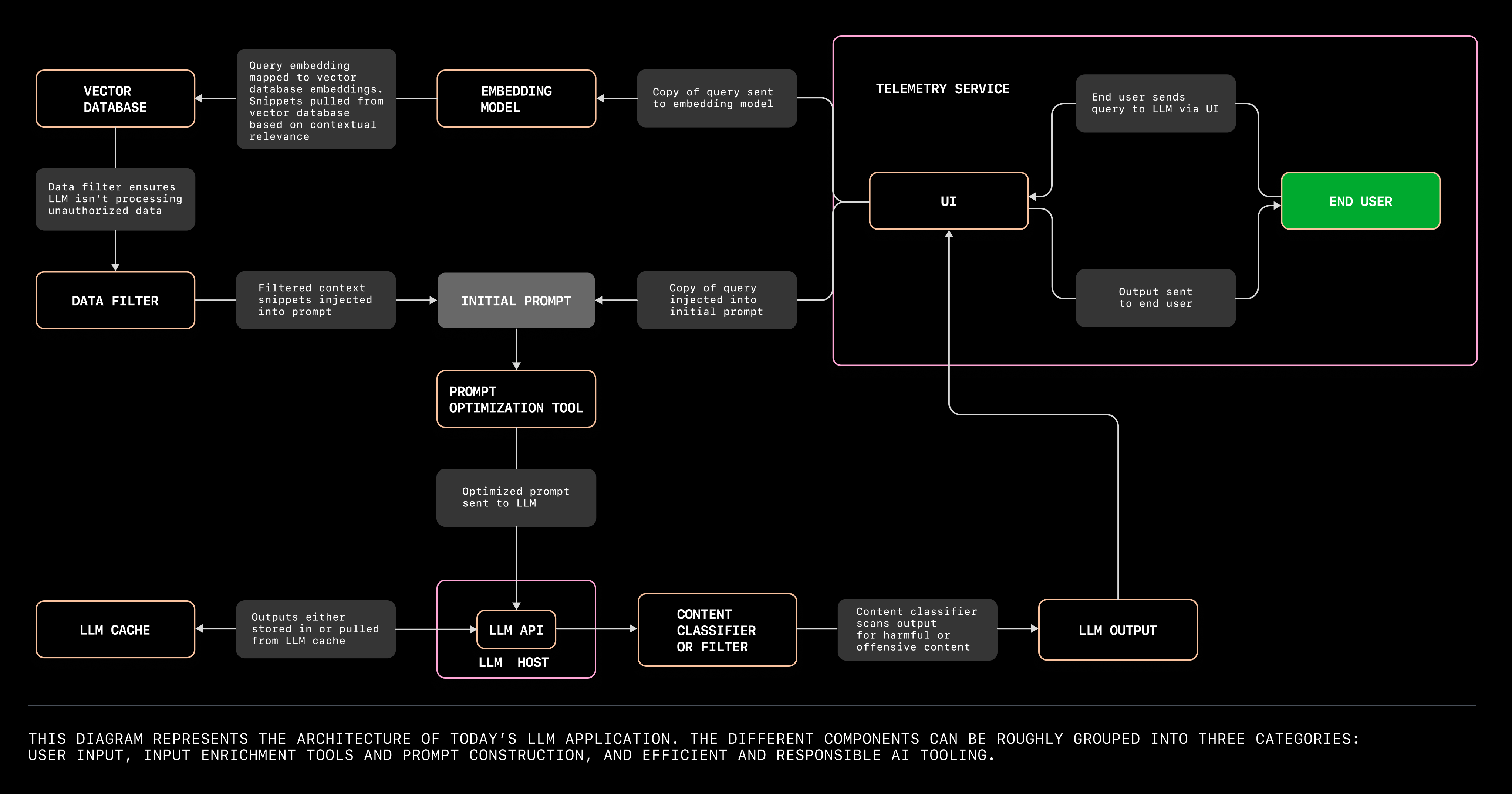

The architecture of today's LLM applications - The GitHub Blog

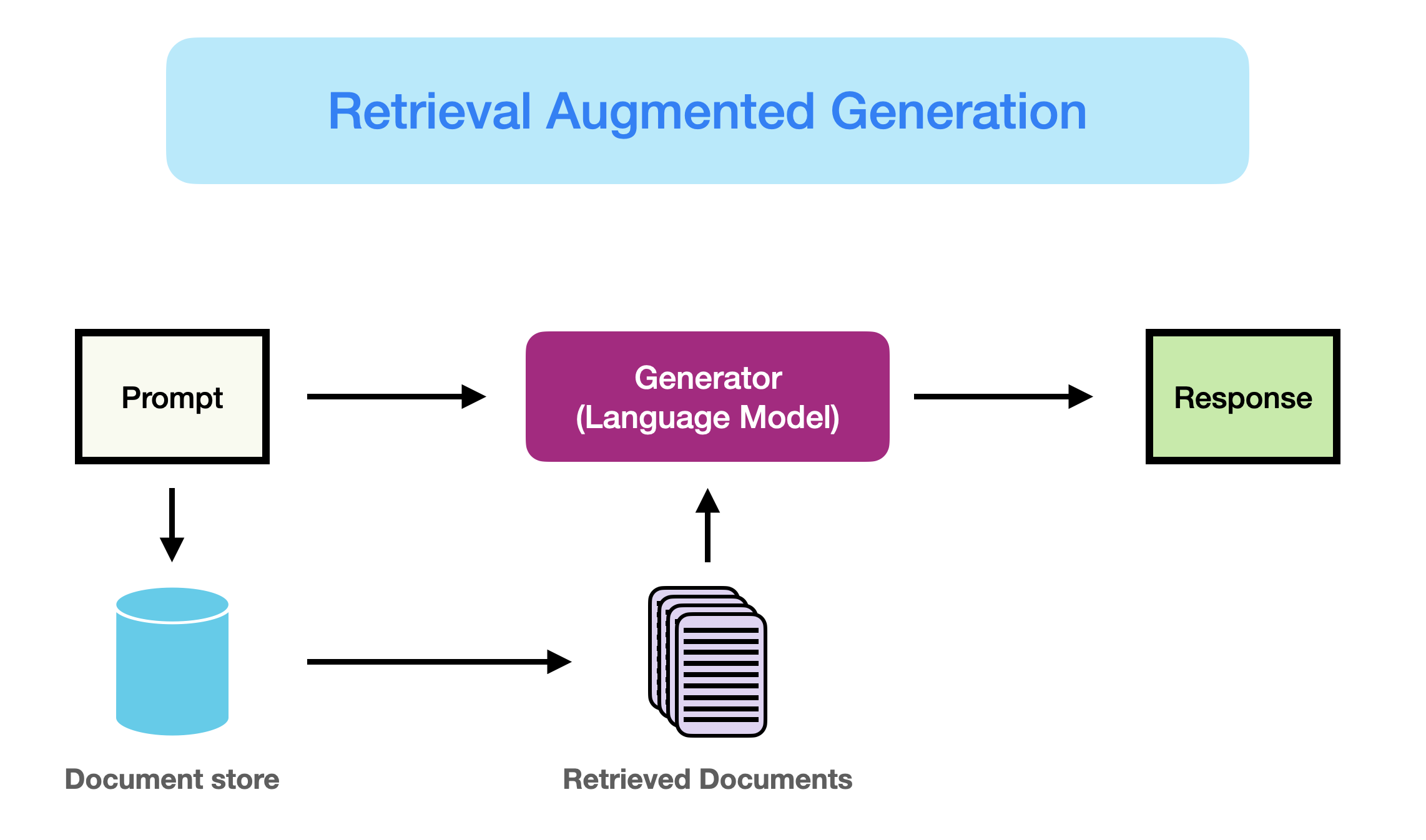

Retrieval Augmented Generation (RAG) for LLMs

Cho-Jui HSIEH, University of Texas at Austin, TX, UT, Department of Computer Science

Fine Tuning vs. Prompt Engineering Large Language Models

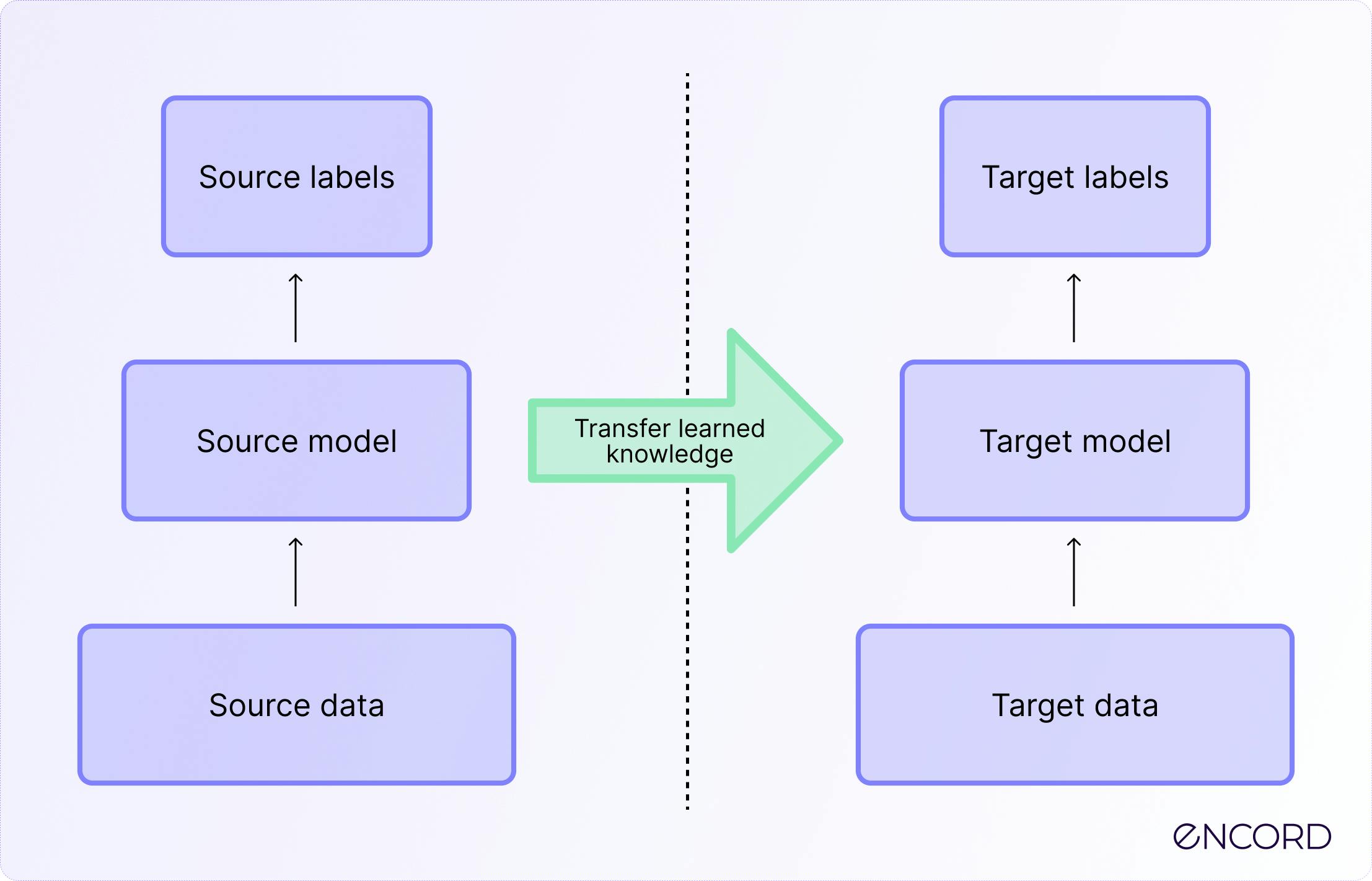

Transfer Learning: Definition, Tutorial & Applications